iPhone 11 and its « Deep Fusion » mode leave no doubt that photography is now software.

Everybody in techdom has noticed that Apple’s Sept 10, 2019 keynote was still deeply focused on the iPhone, and more precisely on what the company calls its « camera system ». The company insisted almost dramatically on the « extraordinary » capabilities of the new product line when it comes to photo and video.

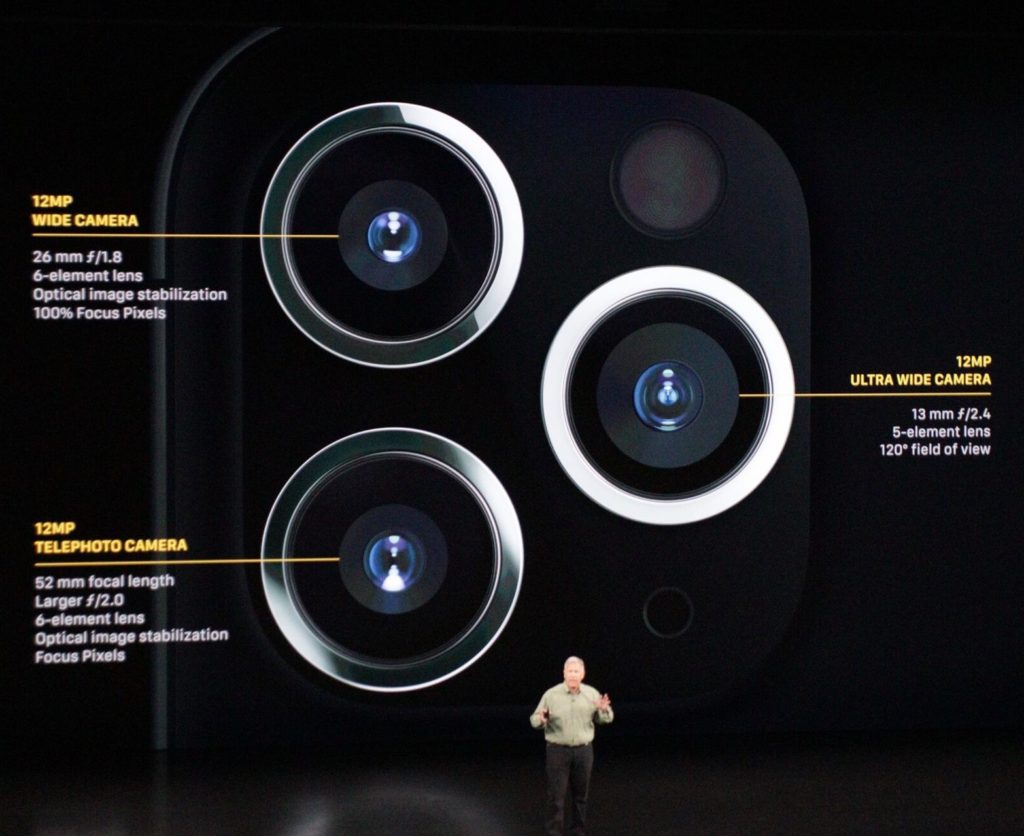

I will focus on the iPhone 11 Pro, which brings the most radical changes (although 3+ optics are no longer new) and also seems to cater, in the keynote at least, to the category of « Pro photographers ». It relies on 3 cameras that boast « exceptional » features in Apple’s usual jargon, yet the real revolution is in what you can do with them : examples from 6 pro photographers were displayed on screen as proof. Same with the « totally redesigned » Photos app. Capturing light was one thing ; it is now eclipsed by processing pixels.

Still, the most prominent features advocated yesterday were « Night Mode », « Deep Fusion » and video shooting modes, which all rely on the 2 or 3 cameras and « pre-shoot » before the A13 Bionic chip combines and optimizes everything for final rendering.

Capturing was one thing, it is now eclipsed by processing.

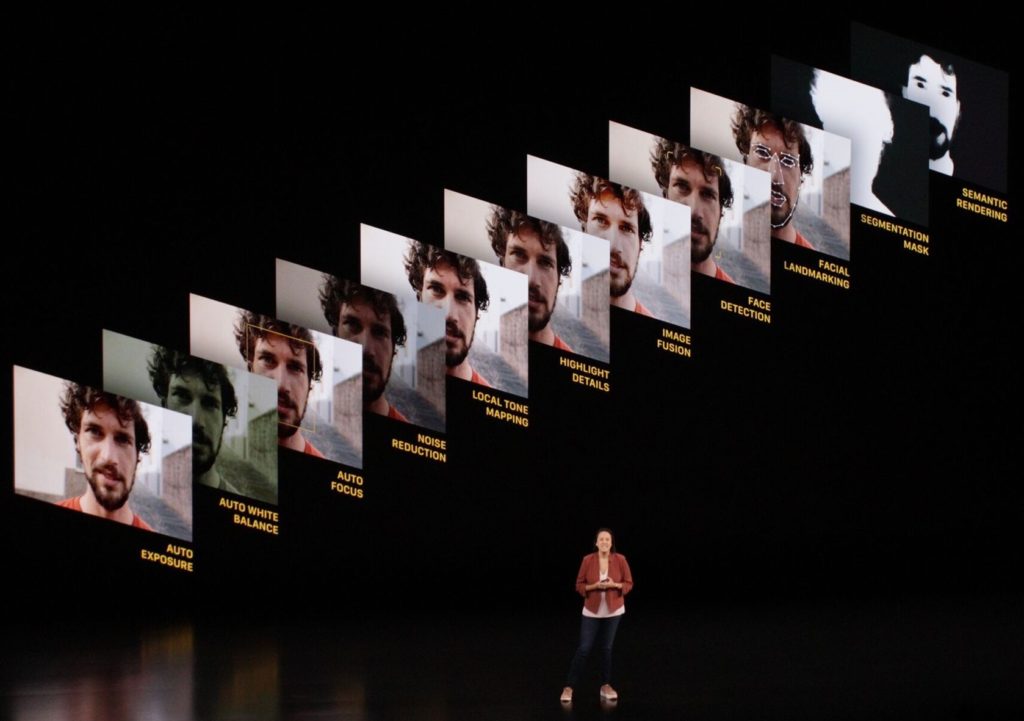

In the case of « Deep Fusion » (which will come, interestingly, as a software update later in November), the details unveiled in the keynote mentioned 8 shots being taken just before the button is pressed, then combined with the photo and processed by the A13 Bionic chip’s neural engine to generate, in almost real time, an optimized picture that has been calculated pixel by pixel.
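To make « calculated pixel by pixel » a bit more concrete, here is a deliberately naive sketch of what merging buffered frames with the final shot could look like. It is not Apple’s algorithm, whose details were not disclosed : the function name `fuse_frames`, the `main_weight` parameter and the plain weighted average are all assumptions made purely for illustration.

```python
from statistics import mean


def fuse_frames(pre_frames, main_shot, main_weight=0.5):
    """Blend buffered frames with the main shot, one pixel at a time.

    pre_frames  : list of frames, each a list of rows of grayscale values
    main_shot   : a single frame with the same dimensions
    main_weight : hypothetical share (0..1) given to the main shot
    """
    height, width = len(main_shot), len(main_shot[0])
    fused = []
    for y in range(height):
        row = []
        for x in range(width):
            # Average the same pixel across all buffered frames.
            buffered = mean(frame[y][x] for frame in pre_frames)
            # Mix that average with the pixel from the main shot.
            row.append(main_weight * main_shot[y][x]
                       + (1 - main_weight) * buffered)
        fused.append(row)
    return fused


# Toy example: 8 buffered 2x2 frames and one brighter main shot.
pre = [[[100, 100], [100, 100]] for _ in range(8)]
shot = [[200, 200], [200, 200]]
fused = fuse_frames(pre, shot)
```

A real pipeline would presumably align the frames and choose weights per region rather than use one global average, but even this toy version shows that the « picture » is a computed result and not a single exposure.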

Apple is always sparing with figures ; the tech Kommentariat got frustrated by the absence of mW in the battery life, or by the very bizarre chart with no axis that supported the idea that the new in-house A13 Bionic chip was the best ever included in any smartphone. Googling deeper does not bring much either : for instance, a similar « 1 trillion operations per second » claim was already made at the previous iPhone XS launch, relating to the previous A12 chip (even if the A13 is supposed to have more transistors, deliver more, and consume less). Setting this aside, and just dividing 1 trillion (10^12) by 12 million pixels results in a whopping 83,000 operations per pixel per second.
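For readers who want to check the back-of-the-envelope division, it fits in a few lines. The two constants are simply the figures quoted above (1 trillion operations per second, a 12-megapixel image) ; the function name `ops_per_pixel` is mine.

```python
OPS_PER_SECOND = 10**12   # the « 1 trillion operations per second » claim
PIXELS = 12 * 10**6       # a 12-megapixel image


def ops_per_pixel(ops_per_second=OPS_PER_SECOND, pixels=PIXELS):
    """Operations available for each pixel over one second."""
    return ops_per_second / pixels


print(round(ops_per_pixel()))  # 83333
```

This is an upper bound on paper only : it assumes the whole second and every operation are spent on a single image, which the keynote did not claim.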

Computational photography delivers post-processed images recreated from pre-processed shots, according to an AI « taste ».

Photography has therefore entered a new era where you take a picture first (if the word still has a meaning), and manage parameters such as focus, exposure and depth of field afterwards. As a result you do not get « one shot » but are offered one optimized computation among tons of others. In the case of « Deep Fusion », what you receive is a post-processed image that has been totally recreated from pre-processed shots, all of this according to an Artificial Intelligence « taste ».

Now (even) « Pro » photographers will start trading freedom of choice for convenience. Pictures have become shots and I am starting to miss my Ilford rolls. Sometimes.

Disclosure : I modestly contributed to this 10 years ago as I joined imsense, bringing single picture Dynamic Range Processing to the iPhone, and later bringing Apple’s HDR mode to decency. We honestly just wanted to rebalance the light by recovering « eye-fidelity ».

Those “pro” features are not for professionals (who fully understand the value of good lighting, good composition and gear that captures more than a couple of dozen photons in a given pixel) but rather for us ordinary people who would like to believe that spending a few hundred dollars on a new camera will make OUR shots more like the pros’.

As, indeed, they will. A little bit. The Instagram Pad Thai will be a bit more colorful. The happy grad’s diploma will be a bit more legible and less confetti-colored. Darker parts of our shots will magically seem more detailed, yet still faithfully render color. Our shots of friends will seem more 3-dimensional, thanks to post-shot lighting adjustments.

Pros who’ve spent years developing their understanding of lighting, lenses, composition and f-stops will only gain tools that can come close to their old skills. But they’ll all say, “the best camera is the one you have with you” and their casual shots will get better.

The real victim of computational photography would seem to be the higher-end point-and-shoot and “bridge” markets. Up to now, they’ve had big advantages from larger sensors for medium-low light, zoom telephoto capabilities and affordability. Now, these “pro” features may add $200–$300 to a mid-level phone you’d buy anyway, which is the price range of those standalone cameras that you’ll no longer bother to pack even on scenic holiday trips.